This guest post was written by Denny Borsboom (dennyborsboom@gmail.com), University of Amsterdam, in response to a recent discussion on the equivalence of latent variable and network models.

Recently, an important set of equivalent representations of the Ising model was published by Joost Kruis and Gunter Maris in Scientific Reports. The paper constructs elegant representations of the Ising model probability distribution in terms of a network model (which consists of direct relations between observables), a latent variable model (which consists of relations between a latent variable and observables, in which the latent variable acts as a common cause), and a common effect model (which also consists of relations between a latent variable and observables, but here the latent variable acts as a common effect). The latter equivalence is a novel contribution to the literature and a quite surprising finding, because it means that a formative model can be statistically equivalent to a reflective model, which one may not immediately expect (do note that this equivalence need not preserve dimensionality, so a model with a single common effect may translate into a higher-dimensional latent variable model).
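To make the network/common-cause equivalence concrete, here is a minimal numerical sketch of the simplest case, under assumptions of our own: binary items coded as -1/+1 and a rank-one interaction matrix, so that the interaction between items i and j is `a_i * a_j`. The parameter values `a` and `b` are made up for illustration, and Kruis and Maris's construction is more general (higher-rank interaction matrices lead to multidimensional latent variables) and may use a different coding. The sketch relies on the Gaussian identity `exp(t^2/2) = integral of exp(theta * t) phi(theta) dtheta`, with `phi` the standard normal density, which turns the squared sum in the Ising model into an integral over a latent variable `theta`. Given `theta`, the items are independent with logistic response functions, as in an IRT model; note that the latent variable is then not normally distributed.

```python
import itertools

import numpy as np

# Hypothetical parameters for a small Ising model with spins x_i in {-1, +1}.
a = np.array([0.8, 0.5, 0.6, 0.4])    # interactions A_ij = a_i * a_j (rank one)
b = np.array([0.1, -0.2, 0.0, 0.3])   # thresholds (external fields)

states = np.array(list(itertools.product([-1, 1], repeat=len(a))))

# Network representation: p(x) proportional to exp(b.x + 0.5 * x^T (a a^T) x)
# = exp(b.x + 0.5 * (a.x)^2). Diagonal terms a_i^2 x_i^2 are constant (x_i^2 = 1).
log_w = states @ b + 0.5 * (states @ a) ** 2
p_network = np.exp(log_w - log_w.max())
p_network /= p_network.sum()

# Latent variable representation, evaluated on a fine grid of theta values.
theta = np.linspace(-10.0, 10.0, 4001)
dtheta = theta[1] - theta[0]
phi = np.exp(-0.5 * theta**2) / np.sqrt(2.0 * np.pi)
eta = np.outer(theta, a) + b                       # shape (n_theta, n_items)

# Item response functions P(x_i | theta) = exp(x_i * eta_i) / (2 cosh eta_i);
# items are conditionally independent given theta, so log-probabilities add.
log_cond = states @ eta.T - np.log(2.0 * np.cosh(eta)).sum(axis=1)

# Latent density: the standard normal reweighted by prod_i 2 cosh(eta_i).
g = phi * np.prod(2.0 * np.cosh(eta), axis=1)
g /= g.sum() * dtheta

# Marginal p(x) = integral of prod_i P(x_i | theta) g(theta), as a Riemann sum.
p_latent = (np.exp(log_cond) * g).sum(axis=1) * dtheta

print(np.max(np.abs(p_network - p_latent)))        # numerical integration error only
```

Both arrays index the same 16 response patterns, and they agree up to the (very small) error of the numerical integration.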

However, the equivalence between ordinary (reflective) latent variable models and network models has been with us for a long time, and I was therefore rather surprised at some people's reactions to the paper and to the blog post that accompanies it. Namely, some appear to think (a) that the fact that network structures can mimic reflective latent variables, and vice versa, is a recent discovery, and (b) that it somehow spells trouble for the network approach itself (because, well, what's the difference?). The first of these claims is sufficiently wrong to be worth refuting, if only to set the historical record straight; the second is sufficiently interesting to be worth investigating a little more deeply. Hence the following notes.

Timing

The equivalence between statistical network models (more specifically, random fields like the Ising model) and latent variable models (e.g., the IRT model) is not actually new. Peter Molenaar in fact suspected the equivalence as early as 2003, when he was still in Amsterdam, stating that “it always struck me that there appears to be a close connection between the basic expressions underlying item-response theory and the solutions of elementary lattice fields in statistical physics. For instance, there is almost a one-to-one formal correspondence of the solution of the Ising model (a lattice with nearest neighbor interaction between binary-valued sites; e.g., Kindermann et al. 1980, Chapter 1) and the Rasch model.” (see p. 82 of this book). Peter never provided a formal proof of his assertion, as far as I know, but clearly the idea that network models and other dynamical models bear a close relation to latent variable models was already in the air back then. I remember various lively discussions on the topic.

The connection between latent variables and networks got kick-started a few years later, when Han van der Maas walked into my office. At the time, he was thinking a lot about the relation between general intelligence and IQ subtest scores, as represented in Spearman’s famous g-factor model. In a conversation with biologists with whom he worked, Han had tried to explain what a factor model does and how it works statistically. Because they didn’t really get it, he had tried to use one of their own examples: lakes. Obviously, he said, the water quality in lakes is associated with various observables; for instance, the number of fish, the size of the algae population, the biodiversity of the lake’s ecosystem, the level of pollution, etc. Han explained that, in psychometrics, water quality would be thought of as a latent variable, which is measured through all of these indicators. He told me that the biologists had stared at him incredulously. No, they had answered, water quality is not really a latent variable. Rather, it is a description of a stable state of a complex system defined by the interactions between the observables in question. For instance, pollution can cause turbidity, which causes plants to die, which causes reduction of biodiversity, which allows the algae population to get out of hand, which increases turbidity, etc. (I am not a biologist, so if you really want to know what’s going on in shallow lakes, read this).

I vividly recall that Han sat in my room and said: couldn't something like this be the case for general intelligence, too? That different cognitive attributes and processes, as measured by IQ subtests (working memory, reading ability, general knowledge, etc.), influence each other's development so as to create a positive manifold in the correlations between test scores? He drew arrows between boxes on a sheet of paper and held it up for me to see. I remember so well that I saw him do that. It was so simple, but you could see that the ramifications were huge (although probably nobody at the time guessed just how huge). I said "I think that's a really good idea". He said: "Yes it is, isn't it?!" and walked out with a smile. That drawing later became Figure 1b in the paper on Han's mutualism model, eventually published in Psychological Review. The appendix to that paper formally proves that the mutualism model (which is basically a dynamical network model) can produce data that are exactly equivalent to the (hierarchical) factor model. That, I think, was the first real equivalence proof that was done in our group.

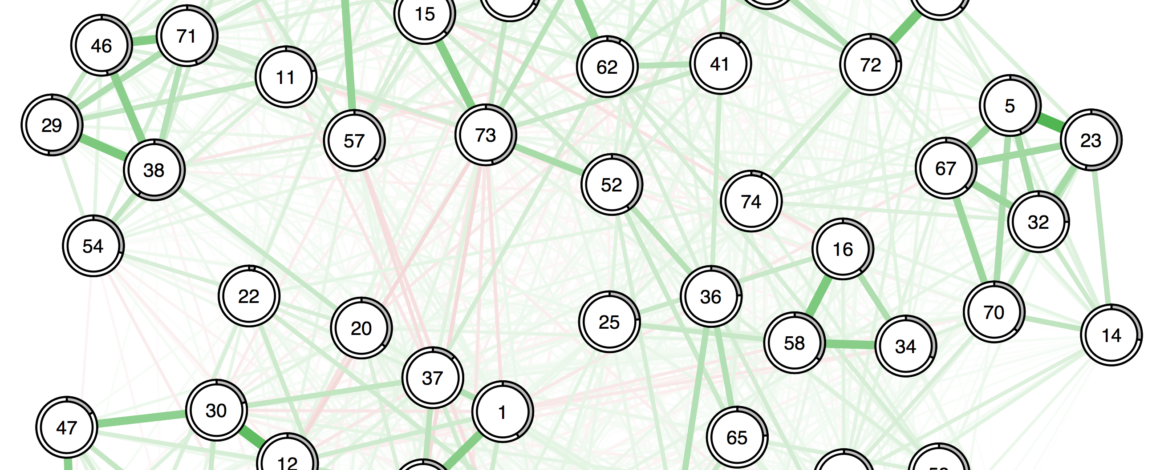
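The mutualism idea can be illustrated with a small simulation. The sketch below assumes the logistic-growth form of the mutualism model as we understand it from the Psychological Review paper, `dx_i/dt = a_i x_i (1 - x_i/K_i) + a_i x_i sum_j M_ij x_j / K_i`, and uses made-up values: six abilities, a uniform coupling `m = 0.1` between every pair, and person-specific capacities `K_i` drawn independently, so there is no common factor anywhere in the data-generating process. Rather than integrating the differential equations, it computes the positive equilibrium directly: setting the derivative to zero gives `x_i = K_i + sum_j M_ij x_j`, that is, `x = (I - M)^{-1} K`.

```python
import numpy as np

rng = np.random.default_rng(0)
n_people, n_tests = 5000, 6

# Hypothetical mutualistic coupling: every ability supports every other one.
m = 0.1
M = m * (np.ones((n_tests, n_tests)) - np.eye(n_tests))

# Person-specific capacities K_i, drawn independently: no common factor.
K = rng.normal(loc=10.0, scale=1.0, size=(n_people, n_tests))

# Positive equilibrium of the growth equations: x = K + M x, i.e. (I - M) x = K.
X = np.linalg.solve(np.eye(n_tests) - M, K.T).T

off = ~np.eye(n_tests, dtype=bool)
R_K = np.corrcoef(K, rowvar=False)   # correlations among capacities (close to 0)
R_X = np.corrcoef(X, rowvar=False)   # correlations among equilibrium abilities

print("mean r(K):", R_K[off].mean().round(3))
print("mean r(X):", R_X[off].mean().round(3))
print("eigenvalues of R_X:", np.linalg.eigvalsh(R_X)[::-1].round(2))
```

With these settings every correlation among the equilibrium scores is positive and the first eigenvalue of `R_X` clearly dominates, the pattern a one-factor model would fit well, even though the capacities themselves are uncorrelated.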

Essentially, this equivalence proof was what got the network approach going, because after many years of fruitless thinking about plausible causal mechanisms that would connect something like the g-factor to IQ or the internalizing-factor to insomnia, it suddenly appeared to us that networks could provide reasonable starting points for explaining correlation structures often observed in psychometrics in general, where the latent variable hypothesis provided very few believable stories. That’s why I decided to develop a general methodological framework around the idea that psychometric items can be profitably modeled using networks.

After starting our network research program in 2008, I played around with network models that we now know are in fact Ising models. I quickly found out that simulations from a network model for binary items produced data very close to IRT models, as would be expected from Peter Molenaar's intuition and Van der Maas et al.'s proof. I gave several talks on this in various locations, including a keynote at a Rasch conference in 2010, where actual thunder broke after I said the Rasch model might be better thought of as a network (no coincidence, of course). However, I could never really show the formal equivalence between the network model and the latent variable model, partly because I lacked the mathematical skills and partly because I erroneously believed that I wasn't simulating from an Ising model but from a closely related model.

That took a better mathematical mind: that of Gunter Maris, who was the first to really penetrate the nature of the correspondence between all of these models, and who, in a beautiful mathematical move, proved that they provide equivalent representations of the probability distribution of observables. I believe this happened in 2012, and I consider it one of the main psychometric breakthroughs I have had the honor of witnessing. I expect it to have lasting effects on the psychometric landscape. We are merely at the beginning of exploiting the connection that this equivalence opens up: a secret tunnel that allows us to travel back and forth between a century of statistical physics literature and a century of psychometrics. The equivalence has now been written down in this chapter by Sacha Epskamp (written in 2014), in this paper by Maarten Marsman from around the same time, and, most recently, in Kruis and Maris's work. Maarten Marsman also has a forthcoming paper that extends the equivalence to a whole range of other models.

Implications

I have noted that some people think that, because there exists an equivalent latent variable model for each network model and vice versa, networks are equivalent to latent variables in general. This is erroneous. That one can always come up with some equivalent latent variable structure to match any network structure (and vice versa) doesn’t mean everything is equivalent to everything else. Care should be taken in distinguishing a statistical model, which describes the probability distribution of a given dataset gathered in a particular way, from the theoretical model that motivates it, which describes not only the probability distribution of this particular dataset, but also that of many other potential datasets that would, for instance, arise upon various manipulations of the system or upon different schemes of gathering data.

This all sounds highly theoretical and abstract, so it is useful to consider some examples. For instance, network structures that project from plausible latent variable models (e.g., scores on working memory items that really all depend on a common psychological process) can be (and as a rule are) highly implausible from a substantive theoretical viewpoint. Your ability to recall the digit span series "1,4,6,3,7,3,5" really doesn't influence your ability to answer the series "9,3,6,5,7,2,4"; instead, both items depend on the same attribute, namely your memory capacity. This indicates that the theoretical model (both item scores depend causally on memory capacity) is more general than, and thus different in meaning from, the statistical model (the joint probability distribution of the items can be factored as specified by an IRT model). Here, the statistical latent variable model is equivalent to a network model, but the theoretical model in terms of a common cause, memory capacity, is not.
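The digit span example can be made concrete with a linear Gaussian one-factor model standing in for the IRT model (our simplification, chosen because the algebra is transparent; the loadings are made up). Conditional on the factor, the items are independent, which is the theoretical claim. Yet the statistical network derived from the same covariance matrix, here the partial correlation network obtained from the inverse covariance (precision) matrix, is fully connected: every pair of items gets a nonzero, positive edge, although no item influences any other.

```python
import numpy as np

# Hypothetical one-factor model: x = lam * theta + e, with Var(theta) = 1.
lam = np.array([0.7, 0.6, 0.8, 0.5, 0.65])
psi = 1.0 - lam**2                    # unique variances; each item has unit variance
Sigma = np.outer(lam, lam) + np.diag(psi)

# Theoretical model: given theta, the residual covariance is diagonal,
# so no item is directly connected to any other.
resid_cov = Sigma - np.outer(lam, lam)

# Statistical network: partial correlations from the precision matrix,
# pcor_ij = -P_ij / sqrt(P_ii * P_jj).
P = np.linalg.inv(Sigma)
d = np.sqrt(np.diag(P))
pcor = -P / np.outer(d, d)
np.fill_diagonal(pcor, 1.0)

print(np.round(pcor, 3))
```

The network is dense because partialling out the other items never fully removes the shared dependence on the factor; only conditioning on the factor itself does.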

Likewise, latent variable structures that project from networks can be highly implausible too. For example, an edge in a network between "losing one's spouse" and "feelings of loneliness" can be statistically represented by a latent variable, as is true for any connected clique of observables. But from an explanatory standpoint, the associated explanation of the correlation in terms of a latent common cause makes no sense whatsoever. It is rather more likely that losing one's spouse causes feelings of loneliness directly. Again, the difference in meaning between the theoretical model (losing one's spouse causes feelings of loneliness) and the statistical model (the correlation between these variables remains nonzero after partialling out any other set of variables in the data) lies in the greater generality of the theoretical model, which extends to cases we haven't observed (e.g., what would have happened if person i's spouse had not died), cases in which we had used different observational protocols (e.g., consulting the population register instead of administering a questionnaire item on whether i's spouse had died), or cases in which we would causally manipulate relevant variables. Statistical models by themselves do not allow for such generalizations (that is in fact one of the reasons that theories are so immensely useful).

Also, at the level of the complete model, the implications of latent variable models can differ greatly from those of network models. For instance, the model proposed for depression in Angélique Cramer’s recent paper is equivalent to some latent variable model, but not, as far as I know, to any of the latent variable models that have been proposed in the literature on depression. In general, if one has two competing theoretical models, one of which is a latent variable model with its structure fixed by theory, and the other of which is a network model with its structure also fixed, it will be possible to distinguish between these because the latent variable model equivalent to the postulated network model is not the same as the latent variable model suggested by the latent variable theory; Riet van Bork is currently working on ways to disentangle such cases.

Finally, even though latent variable models and network models may offer equivalent descriptions of a given dataset, they often predict very different behavior in a dynamic sense. For example, the network model for depression symptoms, in certain areas of the parameter space, behaves in a strongly nonlinear fashion (featuring sudden jumps between healthy and depressed states), while the most standard IRT model should show smooth and relatively continuous behavior. Relatedly, the network model predicts that sudden transitions between states should be announced by warning signals, such as an increase in the predictability of the system prior to the jump (critical slowing down, for which recent work has provided some preliminary evidence). There is no reason to expect that sort of thing to happen under the latent variable model that is equivalent to the network model which was estimated from the data and used in the simulations that produced these predictions.
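The contrast between sudden jumps and smooth change can be illustrated with a deliberately simple toy model, which is not the depression network from Cramer's paper: a mean-field (Curie-Weiss) approximation of a fully connected Ising network, in which the average state `m` satisfies `m = tanh(beta_j * m + h)`. Here `beta_j` stands for overall connectivity and `h` for an external field; both values, and the helper name `sweep`, are hypothetical. The sketch slowly sweeps `h` up and then down, each time starting from the previous state. With strong connectivity (`beta_j = 2`) the state holds and then jumps abruptly, and at the same `h` it sits in different states depending on the sweep direction (hysteresis); with weak connectivity (`beta_j = 0.5`) it changes smoothly and both sweeps coincide.

```python
import math

import numpy as np


def sweep(beta_j, fields, m_start, n_iter=1000):
    """Follow the mean-field state m = tanh(beta_j * m + h) while h changes slowly.

    Each field value is solved by fixed-point iteration that starts from the
    previous solution, mimicking a system that stays in its current state until
    that state no longer exists.
    """
    m, path = m_start, []
    for h in fields.tolist():
        for _ in range(n_iter):
            m = math.tanh(beta_j * m + h)
        path.append(m)
    return np.array(path)


fields = np.linspace(-1.5, 1.5, 301)
i0 = int(np.argmin(np.abs(fields)))       # grid index closest to h = 0

results = {}
for beta_j in (2.0, 0.5):                 # strongly vs weakly connected network
    up = sweep(beta_j, fields, m_start=-1.0)
    down = sweep(beta_j, fields[::-1], m_start=1.0)[::-1]  # realign with `fields`
    results[beta_j] = (up, down)
    print(f"beta_j={beta_j}: m at h=0 is {up[i0]:+.3f} sweeping up, "
          f"{down[i0]:+.3f} sweeping down; largest step {np.diff(up).max():.3f}")
```

In a latent variable model with a smooth item response function, by contrast, expected item scores change gradually with the latent variable and do not depend on the direction of change.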

So, the fact that two models are statistically equivalent with respect to a set of correlational data does not render them equivalent in a general theoretical sense. One could say that while the data models are equivalent, the theories that motivate them are not. This is why it is in fact not so difficult to come up with very simple data extensions that allow one to discriminate between observationally equivalent network and latent variable models. For example, in a latent variable model, effects of external variables are naturally conceived of as going through the latent variable (e.g., genetic and environmental effects on phenotypic variation in behavioral traits, or life events that can trigger depressive episodes), whereas in network explanations these effects propagate through the network. This means that the latent variable model predicts the effect to be expressed in proportion to the factor loadings (so the model should be measurement invariant over the levels of the external variable), while the network model predicts the external effect to propagate over the topology of the network (so the effect should be smaller on variables more distant from the place where the external effect impinges on the network).
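The two predictions can be sketched with linear stand-ins for both models; the linear form, the loadings, the chain-shaped network and all coefficients are assumptions made purely for illustration. In the latent variable version an external variable `z` shifts `theta`, so its effect on item i is `lam_i * gamma`, proportional to the loadings. In the network version `z` acts on node `x0` of a chain `x0-x1-x2-x3-x4`, and the equilibrium effect `(I - W)^{-1} c` spreads along the edges and fades with distance from `x0`.

```python
import numpy as np

n = 5

# Latent variable version: z -> theta -> x_i, so dE[x_i]/dz = lam_i * gamma.
lam = np.array([0.9, 0.7, 0.8, 0.6, 0.75])   # hypothetical loadings
gamma = 0.5                                   # hypothetical effect of z on theta
effect_latent = lam * gamma

# Network version: chain x0 - x1 - x2 - x3 - x4 with symmetric weight w, and z
# acting on x0 only. At equilibrium x = W x + c z, so dx/dz = (I - W)^{-1} c.
w = 0.4
W = np.zeros((n, n))
for i in range(n - 1):
    W[i, i + 1] = W[i + 1, i] = w
c = np.zeros(n)
c[0] = 1.0
effect_network = np.linalg.solve(np.eye(n) - W, c)

print("latent, effect / loading:", np.round(effect_latent / lam, 3))
print("network, effect by node: ", np.round(effect_network, 3))
```

Under the latent variable version, the ratio of effect to loading is the same for every item; under the network version, the effect shrinks at each step along the chain.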

Also, if experimental interventions are available, it should be reasonably easy to discriminate between latent variable models and network models, because in a latent variable model, intervening on an observable is causally ineffective with respect to the other observables in the model. This is because (in standard models) effects cannot travel from indicators to the latent variable, so they cannot propagate. In a network model, however, experimental manipulations of observables should be able to shake the system as a whole, insofar as the observables are causally connected in a network. So statistical equivalence under passive observation does not imply semantic or theoretical equivalence in general (see also Keith Markus' interesting conclusion that statistical equivalence rarely implies theoretical (semantic) equivalence; rather, statements to the effect that two models are statistically equivalent, as a rule, suggest that the models are not identical).
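A similar sketch, again using linear stand-ins with made-up coefficients and hypothetical helper names (`latent_means`, `network_means`), illustrates the intervention argument. Setting `x0` by intervention (overriding its usual causes) leaves the expected values of the other items unchanged in the latent variable version, because the intervention never reaches `theta`; in the network version, the remaining nodes re-equilibrate around the clamped value.

```python
import numpy as np

n = 4
rest = np.arange(1, n)            # the items we do not intervene on

# Latent variable version: E[x_i] = lam_i * theta_mean (hypothetical values).
lam = np.array([0.8, 0.7, 0.6, 0.5])
theta_mean = 1.0


def latent_means(do_x0=None):
    x = lam * theta_mean          # new array; lam itself is not modified
    if do_x0 is not None:
        x[0] = do_x0              # the intervention overrides x0 only
    return x


# Network version: at equilibrium x = W x + b (hypothetical weights and intercepts).
W = 0.2 * (np.ones((n, n)) - np.eye(n))
b = np.full(n, 0.5)


def network_means(do_x0=None):
    if do_x0 is None:
        return np.linalg.solve(np.eye(n) - W, b)
    # Clamp x0; the other nodes settle at x_r = W_rr x_r + W_r0 * x0 + b_r.
    x = np.empty(n)
    x[0] = do_x0
    W_rr = W[np.ix_(rest, rest)]
    x[rest] = np.linalg.solve(np.eye(n - 1) - W_rr, W[rest, 0] * do_x0 + b[rest])
    return x


shift_latent = latent_means(do_x0=3.0)[rest] - latent_means()[rest]
shift_network = network_means(do_x0=3.0)[rest] - network_means()[rest]
print("latent: ", shift_latent)
print("network:", np.round(shift_network, 3))
```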

Update, March 29, 2018: Different scientific models can have equivalent observational consequences. In statistics, this is known as statistical equivalence; in the philosophy of science, as underdetermination of theory by data. This is often hard to explain, and I know of few good illustrations that go beyond Wittgenstein's duck-rabbit. This GIF is a really nice illustration, and a beautiful one too.

Nice! But what about measurement error? In reflective models you estimate items' idiosyncrasies as error variance. In formative models, I think, you assume items are measured without error.

It reminds me of those endless discussions about similarities and differences between PCA and EFA :-)

I think it also opens up links between networks and Partial Least Squares, canonical correlation analysis, etc., don’t you think?

The GIF caught my eye in some social media feed of mine.

I understand that this post is about something else, and I caught the gist of it. But the GIF brought to my mind such concepts as Plato’s allegory of the cave [https://en.wikipedia.org/wiki/Allegory_of_the_Cave], constructivism [https://en.wikipedia.org/wiki/Constructivism_(psychological_school)], or Leary’s reality tunnel [https://en.wikipedia.org/wiki/Reality_tunnel].

All of these concepts have in common that different individuals perceive and interpret the objective reality differently. Between individuals, parts of their perception and interpretations may overlap but are never fully identical.

The GIF makes for a good visualization of this basic idea too, I think.

Is Denny Borsboom the inventor and creator of the animated GIF? It is very nice.

No, Denny is not the inventor of the gif. Someone on Twitter claimed authorship once (I believe in 2018) when I posted it there, but I didn’t verify that.